AMD Expands AI Capabilities on Radeon GPUs with ROCm 6.1.3 and Radeon Software for Linux

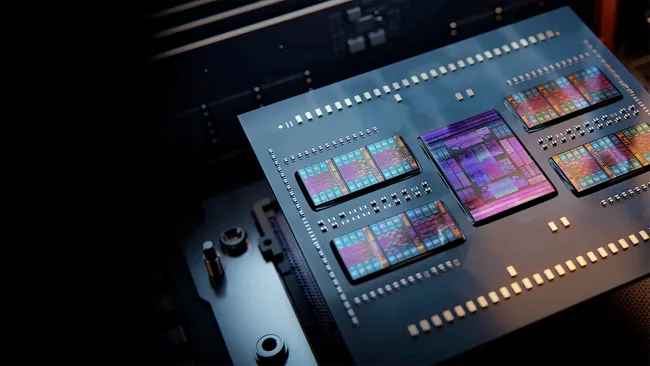

AMD is stepping up its AI development efforts by extending machine learning (ML) support to its RDNA 3-based GPUs. With the release of Radeon Software for Linux 24.10.3 and ROCm 6.1.3, developers can use Radeon RX 7000-series and Radeon Pro W7000-series GPUs for AI work on their own hardware instead of paying for cloud-based compute. ROCm's AI/ML framework support has historically centered on AMD's CDNA-based Instinct accelerators, but this update lets RDNA 3 GPUs run popular frameworks such as PyTorch, ONNX Runtime, and TensorFlow on local workstations.
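A quick way to confirm that a local PyTorch install can actually use a Radeon GPU is to check whether it is a ROCm build. The sketch below assumes a ROCm build of PyTorch, which reports a HIP version in `torch.version.hip` (it is `None` on CUDA and CPU-only builds) and exposes AMD GPUs through the familiar `torch.cuda` API. The helper name `describe_torch_backend` is hypothetical, and it degrades gracefully if PyTorch is not installed.

```python
import importlib.util


def describe_torch_backend() -> str:
    """Report whether the installed PyTorch is a ROCm (HIP) build and sees a GPU.

    Hypothetical helper for illustration; it only reads public torch attributes.
    """
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"

    import torch

    # ROCm builds set torch.version.hip to a version string; other builds leave it None.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version is None:
        return "PyTorch build without ROCm/HIP support"

    # ROCm builds reuse the torch.cuda namespace and the "cuda" device string.
    if not torch.cuda.is_available():
        return f"ROCm build (HIP {hip_version}), but no GPU is visible"
    return f"ROCm build (HIP {hip_version}) using {torch.cuda.get_device_name(0)}"


if __name__ == "__main__":
    print(describe_torch_backend())
```

Because ROCm builds keep the `torch.cuda` interface, most existing PyTorch code that targets the `"cuda"` device should run unchanged on a supported Radeon card.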

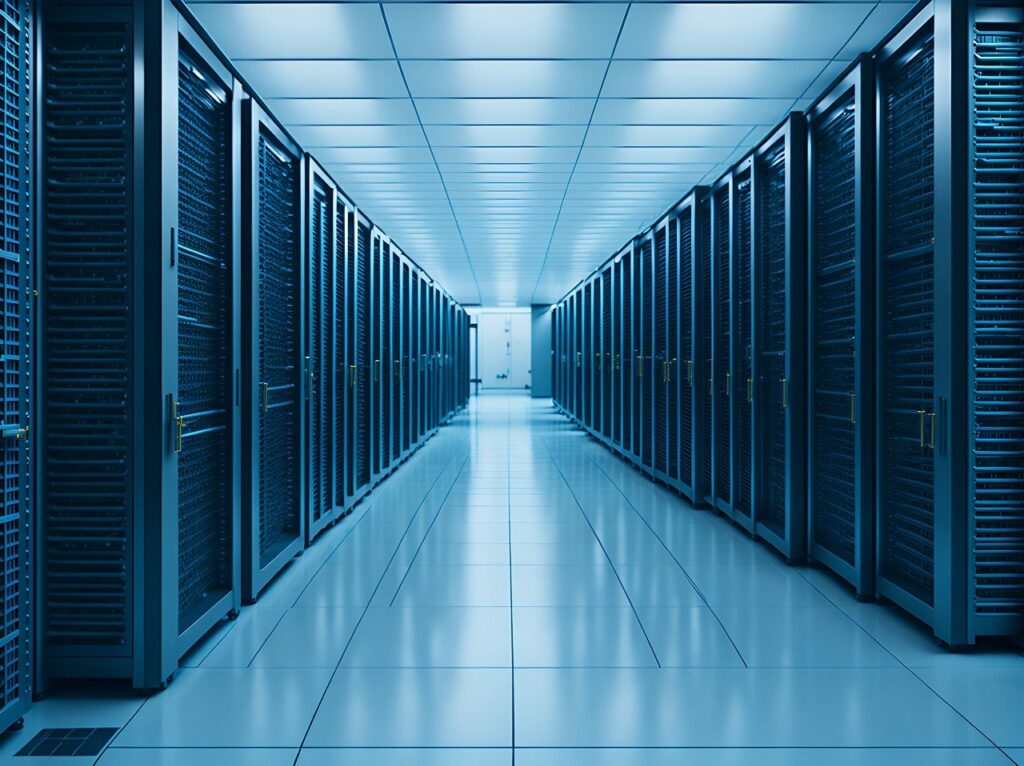

ROCm 6.1.3 also improves inference with ONNX Runtime: through MIGraphX, models can run with optimized processing in the INT8 data format. The software stack brings GPU parallel computing to desktop setups, offering a cost-effective alternative to expensive cloud platforms. And because ROCm supports both the RDNA 3 and CDNA architectures, developers can move applications from desktop to datacenter environments without changing frameworks.
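In ONNX Runtime, MIGraphX is used through an execution provider selected when the inference session is created. The sketch below assumes an ONNX Runtime build that includes the `MIGraphXExecutionProvider` (and optionally the `ROCMExecutionProvider`), and falls back to the CPU when neither is present. The helper names and the `model.onnx` path are hypothetical. Enabling INT8 itself goes through MIGraphX-specific configuration options described in the ONNX Runtime documentation and is not shown here.

```python
from typing import Iterable, List

# Hypothetical preference order: MIGraphX first, then ROCm, then CPU as a last resort.
PREFERRED_PROVIDERS = [
    "MIGraphXExecutionProvider",
    "ROCMExecutionProvider",
    "CPUExecutionProvider",
]


def pick_providers(available: Iterable[str]) -> List[str]:
    """Return the preferred providers that are available, always ending with CPU."""
    available = set(available)
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


def make_session(model_path: str):
    """Create an ONNX Runtime session that prefers MIGraphX when it is installed."""
    import onnxruntime as ort  # imported lazily so pick_providers works without it

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


if __name__ == "__main__":
    print(pick_providers(["CPUExecutionProvider", "MIGraphXExecutionProvider"]))
    # session = make_session("model.onnx")  # hypothetical model file
```

Passing an ordered provider list lets ONNX Runtime assign each graph node to the first provider that supports it, so unsupported operators still run on the CPU rather than failing.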

While AMD has made significant strides in making its Radeon GPUs more appealing for AI development, their software support still lags behind what the company offers on its Instinct accelerators. ROCm 6.2, already available for Instinct accelerators, includes more comprehensive support for features like FP8 data types and the vLLM inference engine, which reduce memory overhead and help scale large-model inference across multiple GPUs. Even so, AMD's push to enable AI on consumer GPUs marks a pivotal step in broadening access to machine learning tools and workflows.
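The memory benefit of lower-precision formats such as FP8 and INT8 comes down to simple arithmetic: weight storage is roughly the parameter count times the bytes per parameter. The sketch below assumes a hypothetical 7-billion-parameter model and counts weights only, ignoring activations, the KV cache, and framework overhead.

```python
# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in decimal gigabytes (weights only)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7B-parameter model
    for dtype in ("fp16", "fp8"):
        print(dtype, weight_memory_gb(params, dtype))
```

Moving from FP16 to an 8-bit format halves the weight footprint (about 14 GB down to about 7 GB in this example), which is what makes larger models fit on a single GPU's memory.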

As AI continues to grow in importance, AMD's ongoing updates and expanding support signal a commitment to making Radeon GPUs a compelling option for developers who want to run AI workloads without relying on cloud infrastructure.